MIT’s SoftMimic Framework Teaches Humanoids to Be Compliant, Not Just Capable

Humanoid robots are becoming increasingly adept at imitating complex human motions. But a critical problem often hides behind the impressive demos: most of these robots are incredibly stiff.

When a robot trained with standard imitation learning methods makes an unexpected contact—like brushing against a table, misjudging an object's location, or interacting with a person—it typically treats that deviation as an error to be corrected "aggressively". This can result in "large, uncontrolled forces," leading to brittle and potentially dangerous behavior.

A new framework from MIT’s Improbable AI Lab, called SoftMimic, aims to solve this by teaching humanoid robots to be compliant, absorbing unexpected forces and interacting gently with the world.

Learning to Be Soft

The core problem, according to the MIT researchers, is that existing methods incentivize robots to "rigidly track" a pre-defined reference motion. Any deviation is punished, so the robot learns to be as stiff as possible.

SoftMimic takes a different approach, described in a paper set to be released on arXiv. Instead of just training a policy on raw human motion data, the team first uses an "inverse kinematics solver" to generate a massive augmented dataset. This new dataset explicitly demonstrates how the robot should compliantly react to a wide range of external forces and user-specified stiffness settings.
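To make the augmentation idea concrete, here is a minimal sketch of how compliant targets could be generated for one reference hand position. It assumes a simple linear spring law (displacement = force / stiffness); the article does not state the exact rule the team uses. The names `AugmentedSample`, `compliant_target`, and `augment` are hypothetical, and the inverse kinematics step that would turn each displaced hand target into a whole-body pose is omitted.

```python
from dataclasses import dataclass
from itertools import product
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class AugmentedSample:
    reference_pos: Vec3   # original, non-compliant hand position (m)
    external_force: Vec3  # sampled external force on the hand (N)
    stiffness: float      # user-specified stiffness (N/m)
    compliant_pos: Vec3   # position the hand should yield to (m)


def compliant_target(reference_pos: Vec3, external_force: Vec3,
                     stiffness: float) -> Vec3:
    """Displace the reference by force / stiffness (assumed spring law)."""
    if stiffness <= 0.0:
        raise ValueError("stiffness must be positive")
    x, y, z = reference_pos
    fx, fy, fz = external_force
    return (x + fx / stiffness, y + fy / stiffness, z + fz / stiffness)


def augment(reference_pos: Vec3, forces: Sequence[Vec3],
            stiffnesses: Sequence[float]) -> List[AugmentedSample]:
    """Pair every sampled force with every stiffness setting."""
    return [
        AugmentedSample(reference_pos, f, k,
                        compliant_target(reference_pos, f, k))
        for f, k in product(forces, stiffnesses)
    ]


if __name__ == "__main__":
    # Illustrative values only: one 10 N push, a soft and a stiff setting.
    for s in augment((0.3, 0.0, 1.0), [(10.0, 0.0, 0.0)], [100.0, 1000.0]):
        print(s.stiffness, s.compliant_pos)
```

Under this assumption, the same push moves the soft target ten times farther from the reference than the stiff one, which is the kind of force- and stiffness-dependent variation the augmented dataset is described as covering.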

The team then uses reinforcement learning (RL) to train a policy with a clever twist: the policy observes the original, non-compliant reference motion, but it is rewarded for matching the pre-computed compliant trajectory from the augmented dataset.
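The asymmetry between what the policy sees and what it is rewarded for can be sketched in a few lines. This is an illustration under stated assumptions, not the paper's implementation: the exponential tracking kernel is a common choice in motion-imitation RL that is swapped in here, and `build_observation`, `tracking_reward`, and `sigma` are hypothetical names.

```python
import math
from typing import List, Sequence


def build_observation(proprioception: Sequence[float],
                      reference_motion: Sequence[float],
                      stiffness_command: float) -> List[float]:
    """Policy input: own sensing, the ORIGINAL reference, and the stiffness.

    The compliant target is deliberately left out, so the policy has to
    infer the external force from proprioception alone.
    """
    return list(proprioception) + list(reference_motion) + [stiffness_command]


def tracking_reward(actual: Sequence[float],
                    compliant_target: Sequence[float],
                    sigma: float = 0.25) -> float:
    """Reward in (0, 1], peaking when the robot matches the compliant target.

    Assumed form: exp(-squared_error / sigma^2).
    """
    sq_err = sum((a - t) ** 2 for a, t in zip(actual, compliant_target))
    return math.exp(-sq_err / sigma ** 2)


if __name__ == "__main__":
    reference = [0.30, 0.00]   # where the original motion wants the hand
    compliant = [0.40, 0.00]   # where it should be while being pushed
    print(tracking_reward(compliant, compliant))   # yielding to the push
    print(tracking_reward(reference, compliant))   # rigidly holding the reference
```

In this sketch, rigidly holding the reference pose while being pushed scores lower than yielding to the compliant pose, which reverses the incentive that makes standard imitation policies stiff.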

This setup forces the policy to learn to infer the external forces it's experiencing—using only its own proprioceptive sensors—and then react with the correct, "soft" behavior.

From Brittle to General-Purpose

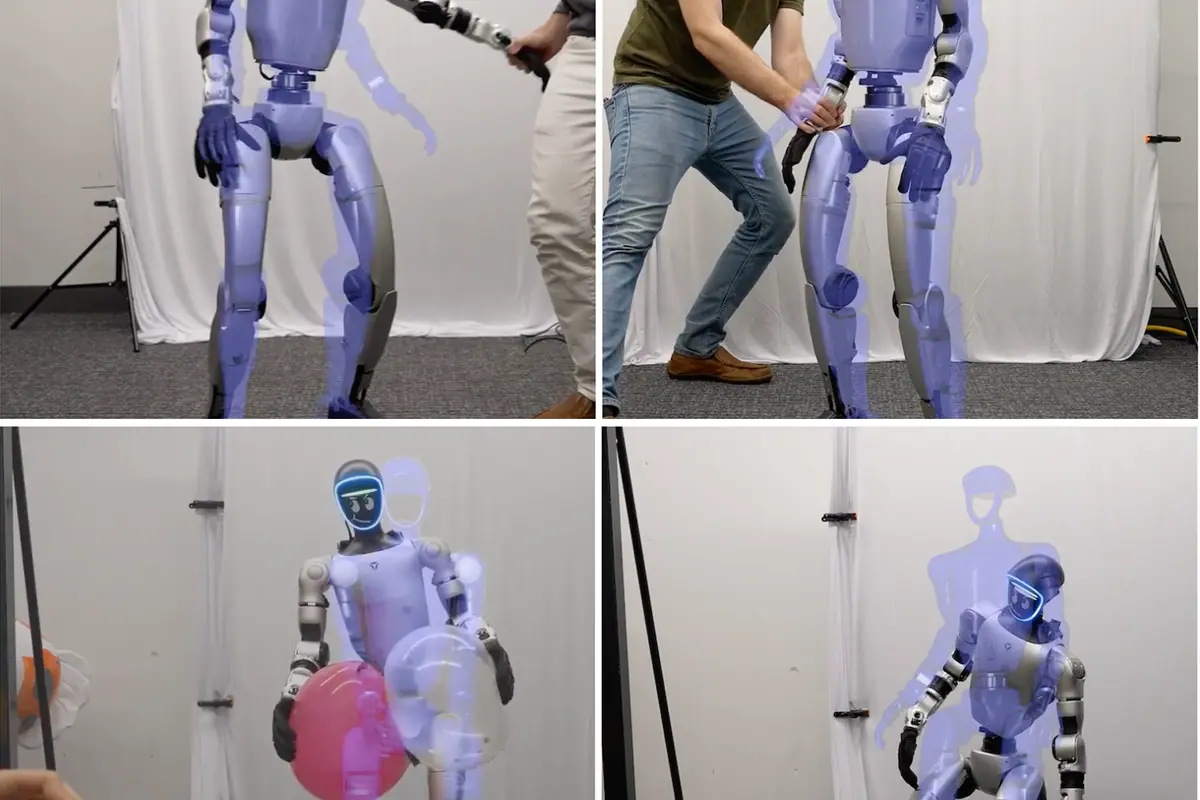

The results, demonstrated in simulations and on a real Unitree G1 humanoid, show a significant improvement in both safety and versatility.

A single SoftMimic policy can be commanded to be soft or stiff at deployment time. When set to a low stiffness, the robot can "absorb collisions," "interact gently" with a person, and "avoid damage".

This compliance also unlocks better generalization. The paper highlights an experiment where the robot uses a single motion reference (for picking up a 20 cm box) to successfully pick up boxes of various widths. The compliant policy is able to adapt, applying a "gentle, consistent squeeze" to each one. A stiff baseline policy, in contrast, generates "large and uncontrolled force spikes" as the box size deviates, "risking damage to the object or robot".
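A back-of-the-envelope calculation shows why stiffness matters so much here. The sketch below assumes each hand behaves like a linear spring around its reference position and that a wider box pushes each hand outward by half the extra width. The function name and all stiffness values are hypothetical and chosen only for illustration; they are not measurements from the paper.

```python
def extra_squeeze_force(box_width_m: float, reference_width_m: float,
                        stiffness_n_per_m: float) -> float:
    """Extra force per hand (N) when the box is wider than the reference.

    Assumed model: force = stiffness * displacement, where each hand is
    displaced by half the width difference. Narrower boxes give zero here
    because the hand would not yet be in contact under this simple model.
    """
    displacement = max(0.0, (box_width_m - reference_width_m) / 2.0)
    return stiffness_n_per_m * displacement


if __name__ == "__main__":
    reference_width = 0.20  # the 20 cm reference box, in metres
    for width in (0.20, 0.25, 0.30):
        soft = extra_squeeze_force(width, reference_width, 50.0)     # hypothetical low stiffness
        stiff = extra_squeeze_force(width, reference_width, 1000.0)  # hypothetical high stiffness
        print(f"width={width:.2f} m  soft={soft:.2f} N  stiff={stiff:.2f} N")
```

Under this model the extra force scales linearly with stiffness, so a setting twenty times stiffer produces twenty times the force for the same size mismatch. That is consistent with the article's contrast between a gentle squeeze and large force spikes, though the real policies are learned rather than ideal springs.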

In other tests involving unexpected contact, such as hitting a "misplaced box" or walking into an obstacle, the compliant policy reduced the maximum collision forces significantly compared to the stiff baseline.

A Softer Future for Humanoids

The SoftMimic framework addresses a fundamental barrier for robots operating "alongside people". As Pulkit Agrawal, head of the Improbable AI Lab, noted on X, "Current humanoids collapse when they touch the world... SoftMimic learns to deviate gracefully from motion references. It natively embraces contact and forces."

The researchers acknowledge open questions. A key next step is developing methods for the robot to "dynamically adjust" its own stiffness based on the task—for example, using high stiffness to lift a heavy object but low stiffness to hand an object to a person.

The team also suggests future work could involve improving the data augmentation process by incorporating dynamics (not just kinematics) or training a single "foundational compliant whole-body controller" on massive, diverse motion datasets.
